The Telecom industry and Communications Service Providers (CSPs) are undergoing a massive operational transformation, using Software Defined Networking (SDN) and Network Functions Virtualization (NFV) to bring agility to network services while reducing costs. As part of this trend, the adoption of microservices architectures and container technologies, such as Docker, is already underway and has proven effective. The necessary evolution of NFV infrastructure to support cloud-native applications is gaining strong traction at all major Telecom companies, as the new 5G core will be cloud-native from the start.

Today, containers are no longer confined to the core network or to simple software development. Their use has extended to customer premises and to the edge of the network, where low latency, resiliency, and portability requirements are extremely important. Operators, for example, must be able to run and operate containerized applications in these environments.

However, given the number of containers a Telco application may consist of, and complex requirements such as load balancing, monitoring, failover, and scalability, it is becoming impractical to manage each container individually and ensure that there is no downtime. For that reason, containers are now being managed by a Container Orchestration Engine such as Kubernetes (k8s). The massive infrastructure investments being made by existing and new Telecom providers are driving the move to Kubernetes, the leading container-orchestration system.

Kubernetes is an open-source container-orchestration system for automating application deployment, scaling, and management. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). It can orchestrate containers on bare metal servers and in virtual machines. These options allow containers to be efficiently used with private clouds and public clouds such as AWS, GCP, Azure, and others.

The worldwide telecom industry is turning to Kubernetes, along with other cloud-native technologies, to improve both operational and development efficiency. Various open source projects, such as Open Network Automation Platform (ONAP) and SDN-Enabled Broadband Access (SEBA), are part of this effort.

So Why Kubernetes?

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services. It manages containerized applications by providing features to run distributed systems resiliently. Here are some features it provides:

- Self-healing: Kubernetes restarts containers that fail, replaces containers, kills containers that don’t respond to user-defined health checks, and doesn’t advertise them to clients until they are ready to be placed into service.

- Resource management: Kubernetes allows you to specify how much CPU and memory (RAM) each container needs. When containers have resource requests specified, Kubernetes can make better decisions about where to place them and how to manage their resources.

- Horizontal autoscaling: Kubernetes autoscalers automatically size an application up or down based on the usage of specified resources (within defined limits).

- Service discovery and load balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment is stable.

- Storage orchestration: Kubernetes allows you to automatically mount a storage system of your choice, such as local storage, public cloud providers, and more.

- Automated rollouts and rollbacks: With Kubernetes, you can describe the desired state for your deployed containers, and it can change the actual state to the desired state at a controlled rate. For example, you can automate Kubernetes to create new containers for your deployment, remove existing containers and move all their resources to the new container.
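To make the horizontal autoscaling feature above more concrete, here is a minimal sketch of the core scaling rule described in the Kubernetes Horizontal Pod Autoscaler documentation: the desired replica count is the current count scaled by the ratio of the observed metric to the target metric, rounded up. The function name and parameters are illustrative assumptions, and the real autoscaler also applies tolerances and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas):
    """Return the replica count suggested by the basic HPA rule.

    Simplified sketch: ceil(current_replicas * current_metric / target_metric),
    clamped to the configured [min_replicas, max_replicas] range.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Stay "within defined limits", as the autoscaler configuration requires.
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas averaging 90% CPU against a 60% target, limits 2..10
print(desired_replicas(4, 90, 60, 2, 10))  # prints 6
```

When load drops, the same rule scales the application back down, but never below the configured minimum.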

How Does Kubernetes Work?

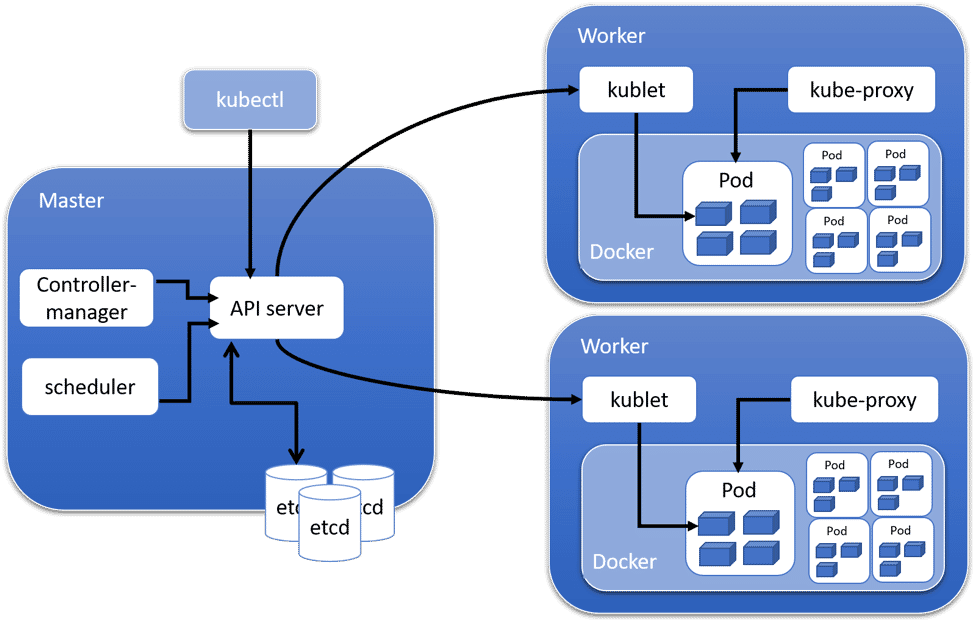

A Kubernetes cluster consists of at least one cluster master and multiple worker machines called nodes. When you interact with k8s, you are communicating with your cluster’s Kubernetes master. The k8s master node is responsible for maintaining the desired state for your cluster, while the worker nodes in a cluster are the machines (VMs, physical servers, etc.) that run your applications and cloud workflows. The diagram above shows a high-level Kubernetes architecture: a cluster with a master and two worker nodes.

The k8s master node consists of several components, including:

- kube-apiserver: Exposes the Kubernetes API. It is the front-end for the Kubernetes control plane. It is the central management entity that receives all REST (Representational State Transfer) requests.

- etcd: A simple, distributed key-value store used to hold the Kubernetes cluster data, API objects, and service discovery details.

- kube-controller-manager: Runs several distinct controller processes in the background to regulate the shared state of the cluster and perform routine tasks. When a change in a service configuration occurs, the controller spots the change and starts working towards the new desired state.

- kube-scheduler: Watches for newly created pods that have no node assigned and selects, based on resource utilization, a node for them to run on. It reads each pod’s operational requirements and schedules the pod on the best-fit node.
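The division of labor between the controller manager and the scheduler described above can be illustrated with a toy model. This is only a sketch under simplifying assumptions, not how Kubernetes is implemented: the `Node` and `Pod` classes, the `schedule` and `reconcile` functions, and the "most free CPU wins" scoring are all hypothetical, and the real components work through the API server and use far richer filtering and scoring.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_free_m: int     # free CPU in millicores (hypothetical capacity model)
    mem_free_mi: int    # free memory in MiB


@dataclass
class Pod:
    name: str
    cpu_request_m: int
    mem_request_mi: int
    node: Optional[str] = None   # None means "not yet scheduled"


def schedule(pod: Pod, nodes: List[Node]) -> Optional[Node]:
    """Scheduler sketch: keep nodes that fit, pick the one with most free CPU."""
    fitting = [n for n in nodes
               if n.cpu_free_m >= pod.cpu_request_m
               and n.mem_free_mi >= pod.mem_request_mi]
    if not fitting:
        return None              # the pod stays pending
    best = max(fitting, key=lambda n: n.cpu_free_m)
    best.cpu_free_m -= pod.cpu_request_m
    best.mem_free_mi -= pod.mem_request_mi
    pod.node = best.name
    return best


def reconcile(desired: int, pods: List[Pod], nodes: List[Node],
              cpu_request_m: int = 500, mem_request_mi: int = 256) -> List[Pod]:
    """Controller sketch: one pass that moves actual state toward desired state."""
    while len(pods) < desired:   # too few pods: create unscheduled ones
        pods.append(Pod(f"web-{len(pods)}", cpu_request_m, mem_request_mi))
    while len(pods) > desired:   # too many pods: remove and release resources
        removed = pods.pop()
        for n in nodes:
            if n.name == removed.node:
                n.cpu_free_m += removed.cpu_request_m
                n.mem_free_mi += removed.mem_request_mi
    for pod in pods:             # hand pods without a node to the scheduler
        if pod.node is None:
            schedule(pod, nodes)
    return pods


nodes = [Node("worker-1", 1000, 2048), Node("worker-2", 1000, 2048)]
pods: List[Pod] = []
for p in reconcile(3, pods, nodes):
    print(p.name, "->", p.node)
```

Calling `reconcile` again with a different desired count creates or removes pods as needed, which mirrors the idea that the control plane continuously works toward the declared state rather than executing one-off commands.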

Each k8s worker node consists of three components:

- kubelet: An agent that runs on each worker node in the cluster and makes sure that pods and their containers are healthy and running in the desired state.

- kube-proxy: A proxy service that runs on each worker node to deal with individual host subnetting and to expose services to the external world. It performs request forwarding to the correct pods/containers across the various isolated networks in a cluster.

- Container runtime: A container engine, such as Docker, that runs the containerized applications.

Kubernetes contains several abstractions that represent the state of your system: deployed containerized applications and workloads, their associated network and disk resources, and other information about what your cluster is doing. These abstractions are represented by objects in the Kubernetes API, for example Pod, Service, Volume, Namespace, ReplicaSet, and Deployment.
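As an illustration of how such API objects are declared, the sketch below builds a Deployment manifest as a plain Python dictionary and serializes it to JSON, a format `kubectl` accepts alongside YAML. The field names follow the `apps/v1` Deployment schema, while the application name `web`, the `nginx:1.25` image, the replica count, and the resource figures are assumptions chosen only for the example.

```python
import json

# Desired state for a hypothetical "web" application (all values illustrative).
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web", "namespace": "default"},
    "spec": {
        "replicas": 3,
        # The selector must match the labels on the pod template below.
        "selector": {"matchLabels": {"app": "web"}},
        # Roll out changes at a controlled rate: one extra pod at a time,
        # never dropping below the desired count.
        "strategy": {
            "type": "RollingUpdate",
            "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
        },
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {
                "containers": [{
                    "name": "web",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                    # Requests inform scheduling; limits cap usage.
                    "resources": {
                        "requests": {"cpu": "250m", "memory": "128Mi"},
                        "limits": {"cpu": "500m", "memory": "256Mi"},
                    },
                    # Traffic is only routed to the pod once this check passes.
                    "readinessProbe": {
                        "httpGet": {"path": "/", "port": 80},
                        "periodSeconds": 5,
                    },
                }],
            },
        },
    },
}

manifest_json = json.dumps(deployment, indent=2)
print(manifest_json)
```

Saved to a file such as `deployment.json` (a hypothetical name), the output could be submitted with `kubectl apply -f deployment.json`, after which the cluster works to bring the running pods in line with the declared state.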

IP Infusion Innovations is looking forward to the ongoing growth of cloud-native technologies, and most specifically Kubernetes, in the Telecom sector. We actively work with companies to implement SDN solutions that use cloud-native technologies and deploy containerized applications in k8s clusters.