Building a Future-Ready AI Network

Building a high-performance AI data center is a critical investment for enterprises aiming to lead in the AI era. While GPUs provide the computational power, the network is the backbone that keeps data flowing to them and protects return on investment (ROI). A suboptimal network can reduce GPU efficiency by up to 50% during the compute-exchange-update phase, because RoCEv2-based AI workloads depend on a lossless, low-latency fabric to minimize Job Completion Time (JCT).
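To see how network stalls turn into lost GPU efficiency and longer JCT, consider a simplified step-time model. Each training step spends some time computing and some time waiting on communication that is not overlapped with compute ("exposed" time). The sketch below assumes that model. The function names and the 100 ms timings are illustrative, not measurements. In this model, efficiency falls to 50% when exposed communication time equals compute time, which is one way to picture the loss described above.

```python
def gpu_efficiency(compute_ms: float, exposed_comm_ms: float) -> float:
    """Fraction of a training step the GPU spends computing.

    Simplified model (an assumption): communication that is not overlapped
    with compute ("exposed" time) stalls the GPU for its full duration.
    """
    if compute_ms <= 0 or exposed_comm_ms < 0:
        raise ValueError("compute_ms must be > 0 and exposed_comm_ms >= 0")
    return compute_ms / (compute_ms + exposed_comm_ms)


def job_completion_time_ms(steps: int, compute_ms: float, exposed_comm_ms: float) -> float:
    """JCT for a job of identical steps, each paying compute plus exposed communication."""
    return steps * (compute_ms + exposed_comm_ms)


# Hypothetical step timings for illustration only, not measurements.
print(gpu_efficiency(100.0, 100.0))                # 0.5
print(job_completion_time_ms(1000, 100.0, 100.0))  # 200000.0
print(job_completion_time_ms(1000, 100.0, 10.0))   # 110000.0
```

Under these assumptions, cutting exposed communication from 100 ms to 10 ms per step shortens the hypothetical job from 200 s to 110 s. Real fabrics overlap much of their communication with compute, so treat this as an upper-bound intuition rather than a prediction.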

This framework, grounded in 2025 industry insights, helps enterprises design a future-ready AI network by balancing performance, cost, and flexibility. Hyperscalers like Google, Meta, and Amazon have leveraged whitebox networking for scale and efficiency. Enterprises can now adopt similar strategies using solutions like OcNOS, SONiC, or proprietary systems, each offering distinct trade-offs for Data Center Interconnect (DCI) and AI workloads.

Core Decision Axes

Selecting an AI network involves evaluating four key trade-offs that shape your data center strategy:

Strategic Control vs. Simplicity: Prioritize full-stack ownership for flexibility, or a single-vendor solution for ease of operation.

Performance vs. Cost: Weigh high scalability at a low cost per port against premium, pre-integrated performance.

Innovation vs. Stability: Adopt cutting-edge technology for agility or proven ecosystems for reliability.

Openness vs. Validation: Choose open, interoperable architectures or pre-validated, cohesive stacks.

Strategic Archetypes

Align your organization with one of four archetypes, each capable of supporting high-performance AI fabrics and robust DCI:

Proprietary – Vendor-Locked (e.g., Cisco/Arista): Offers reliability and integrated support, and builds on existing vendor CLI expertise. Path: Use Cisco Nexus or Arista 7000 series switches; DCI via Cisco ACI Multi-Site or Arista CloudVision with EVPN/VXLAN. Pros: High reliability, streamlined support. Cons: Higher costs, vendor lock-in. Best for: Enterprises prioritizing turnkey solutions.

Open – Commercial OcNOS: Provides cost savings and openness with enterprise-grade support. Path: OcNOS on whitebox hardware (e.g., Edgecore, UfiSpace); DCI with IPoDWDM and 400G ZR/ZR+ optics (e.g., Smartoptics, Ciena). Offers an estimated 40-60% TCO savings, helped by perpetual licensing. Pros: Cost-efficient, robust support via IP Infusion’s partner network. Cons: Commercial software, not open source. Best for: Enterprises seeking value and scalability with reliable support.

Open – Commercial SONiC: Combines open-source flexibility with vendor-backed support. Path: Commercial SONiC distributions (e.g., Broadcom, NVIDIA) on whitebox hardware; DCI with SR-MPLS and 400G ZR+ optics. Pros: High flexibility, growing ecosystem. Cons: Higher licensing costs, emerging support. Best for: Organizations valuing open ecosystems with vendor support.

Open DIY (SONiC): Leverages open-source SONiC for custom, large-scale fabrics. Path: Open-source SONiC/FRR on whitebox; DCI with custom SR-MPLS and 400G optics. Pros: Maximum control, cost-effective for large-scale deployments. Cons: High operational complexity, requires significant R&D. Best for: Hyperscaler-like firms with advanced engineering resources.

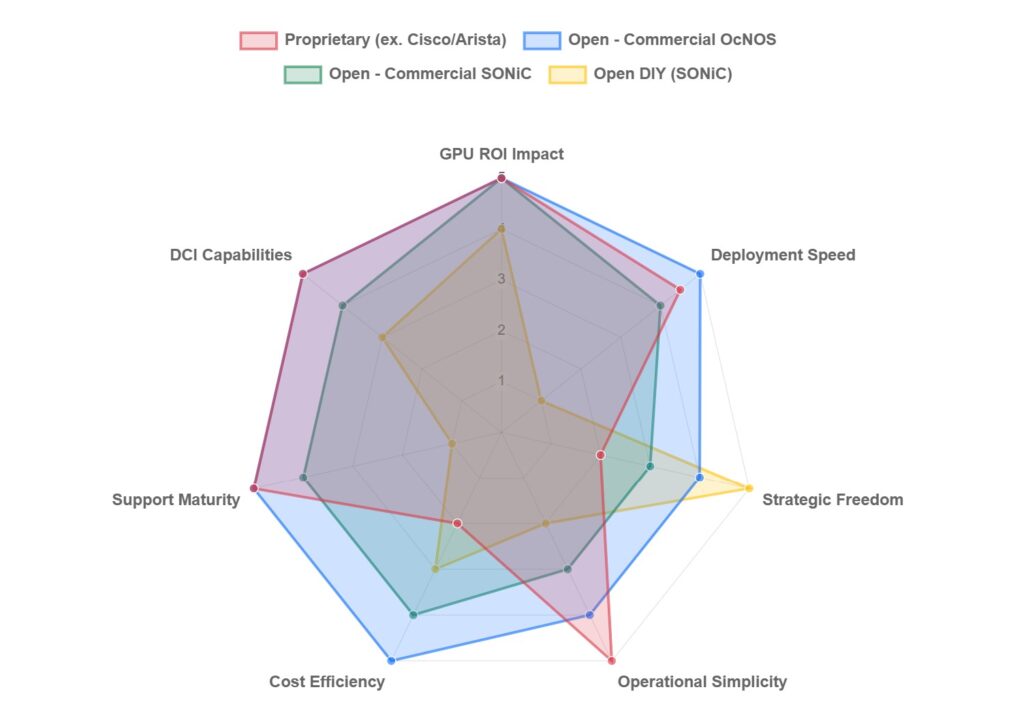

Trade-Off Comparison

Decision Tree

Use this decision tree to identify your archetype:

- Start: What is your primary operational model for an AI network?
  - A) Turnkey Simplicity: We prefer a pre-integrated, single-vendor stack with streamlined support.
    - Result → Proprietary (Cisco/Arista): Best for enterprises seeking maximum reliability and operational ease with minimal integration effort.
  - B) Open Architecture: We want to avoid vendor lock-in and leverage a disaggregated model.
    - Next Question: What is your in-house technical capability?
      - A) Enterprise-Focused: We need a commercially supported, pre-validated open solution.
        - Next Question: What is the priority for your commercial open solution?
          - A) Mature Ecosystem & Cost-Efficiency: We value a solution with a traditional CLI, broad partner support, and favorable TCO.
            - Result → Open – Commercial OcNOS: Suited for balancing performance, scalability, and cost with robust, enterprise-grade support.
          - B) Linux-Native Flexibility: We prioritize a cutting-edge, Linux-native environment and a rapidly growing open-source community.
            - Result → Open – Commercial SONiC: A strong choice for organizations with Linux/automation skills seeking maximum flexibility from a modern NOS.
      - B) Extensive R&D: We can build, customize, and maintain our own Network Operating System (NOS).
        - Result → Open DIY (SONiC): Ideal for hyperscaler-like organizations that require absolute control and have deep engineering resources.
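The decision tree can also be expressed as a small function, which is handy for embedding in an internal planning checklist. This is a minimal sketch. The answer keys ("turnkey", "open", "enterprise", "rnd", "mature", "linux") and the `Archetype` names are hypothetical labels chosen for illustration; only the branching logic comes from the tree.

```python
from enum import Enum
from typing import Optional


class Archetype(Enum):
    PROPRIETARY = "Proprietary (Cisco/Arista)"
    COMMERCIAL_OCNOS = "Open – Commercial OcNOS"
    COMMERCIAL_SONIC = "Open – Commercial SONiC"
    OPEN_DIY_SONIC = "Open DIY (SONiC)"


def choose_archetype(
    operational_model: str,
    capability: Optional[str] = None,
    priority: Optional[str] = None,
) -> Archetype:
    """Walk the decision tree; the answer keys are hypothetical labels.

    operational_model: "turnkey" or "open"
    capability (asked only for "open"): "enterprise" or "rnd"
    priority (asked only for "enterprise"): "mature" or "linux"
    """
    # Question 1: primary operational model.
    if operational_model == "turnkey":
        return Archetype.PROPRIETARY
    if operational_model != "open":
        raise ValueError(f"unknown operational model: {operational_model!r}")

    # Question 2: in-house technical capability.
    if capability == "rnd":
        return Archetype.OPEN_DIY_SONIC
    if capability != "enterprise":
        raise ValueError(f"unknown capability: {capability!r}")

    # Question 3: priority for a commercial open solution.
    if priority == "mature":
        return Archetype.COMMERCIAL_OCNOS
    if priority == "linux":
        return Archetype.COMMERCIAL_SONIC
    raise ValueError(f"unknown priority: {priority!r}")


print(choose_archetype("turnkey").name)                      # PROPRIETARY
print(choose_archetype("open", "enterprise", "linux").name)  # COMMERCIAL_SONIC
print(choose_archetype("open", "rnd").name)                  # OPEN_DIY_SONIC
```

Raising `ValueError` on an unrecognized or missing answer keeps an incomplete questionnaire from silently falling through to a default archetype.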

Decision Table Summary

Exploring OcNOS for AI

OcNOS, developed by IP Infusion, is a network operating system designed to streamline AI fabric operations. It supports a complete AI stack, standard CLI, and integrates with whitebox hardware. Additionally, OcNOS offers robust DCI through cost-efficient IPoDWDM. Industry benchmarks suggest potential TCO savings of 40-60% compared to proprietary solutions, enhanced by perpetual licensing. IP Infusion’s partner network provides global and localized support. A free OcNOS Virtual Machine is available for validation.
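A licensing model shapes TCO over time: a perpetual license is paid once, while subscription and support fees recur every year. A multi-year comparison is therefore more informative than upfront price alone. The sketch below is a simple TCO calculator under that assumption. The `CostModel` fields and all input figures are hypothetical placeholders, not quotes, list prices, or benchmark results. Replace them with numbers from actual vendor quotes.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    """Per-switch cost inputs (hypothetical); replace with figures from real vendor quotes."""
    hardware: float          # one-time hardware cost
    upfront_license: float   # one-time license fee, e.g. a perpetual license
    annual_recurring: float  # yearly support and/or subscription fees

    def tco(self, years: int) -> float:
        """Total cost of ownership over the given number of years."""
        return self.hardware + self.upfront_license + self.annual_recurring * years


def savings_pct(baseline: CostModel, candidate: CostModel, years: int) -> float:
    """Percentage by which the candidate's TCO undercuts the baseline over `years`."""
    base = baseline.tco(years)
    if base <= 0:
        raise ValueError("baseline TCO must be positive")
    return 100.0 * (base - candidate.tco(years)) / base


# Placeholder inputs only: not quotes, list prices, or benchmark results.
incumbent = CostModel(hardware=60_000, upfront_license=20_000, annual_recurring=12_000)
open_nos = CostModel(hardware=30_000, upfront_license=10_000, annual_recurring=5_000)

for years in (3, 5):
    print(years, round(savings_pct(incumbent, open_nos, years), 1))
```

Running the comparison over several horizons shows whether the savings grow or shrink as recurring fees accumulate, which is where perpetual and subscription licensing diverge.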

Action Plan

To build an AI network that maximizes GPU efficiency and scales dynamically:

- Identify Your Archetype: Use the decision tree to align with your priorities, whether reliability, cost, or flexibility.

- Evaluate Options: Test solutions in your environment. Download the free OcNOS Virtual Machine, explore SONiC’s open-source community, or trial proprietary systems such as Cisco Nexus or Arista 7000 series switches.

- Compare TCO and Support: Request quotes from IP Infusion (OcNOS), commercial SONiC vendors (e.g., Broadcom, NVIDIA), and proprietary vendors (Cisco, Arista) to assess long-term costs, licensing models, and support reliability.

- Pilot for Validation: Deploy a small-scale AI fabric to measure JCT, latency, and operational fit.
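When validating a pilot, summarize repeated runs instead of relying on a single best case. Tail latency (p99) is worth tracking alongside the median, because collective operations tend to wait on the slowest flow. The sketch below assumes you have already collected per-run JCT values (seconds) and per-message latency samples (microseconds) with your own tooling. The function names and sample values are hypothetical placeholders. Nearest-rank is only one of several common percentile definitions.

```python
import math
import statistics


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100 over a non-empty sample list."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize_pilot(jct_s: list[float], latency_us: list[float]) -> dict[str, float]:
    """Summarize repeated pilot runs: JCT in seconds, latency samples in microseconds."""
    return {
        "jct_mean_s": statistics.mean(jct_s),
        "jct_max_s": max(jct_s),
        "latency_p50_us": percentile(latency_us, 50),
        "latency_p99_us": percentile(latency_us, 99),
    }


# Placeholder values for illustration; substitute data from your own pilot tooling.
summary = summarize_pilot(
    jct_s=[410.0, 400.0, 420.0],
    latency_us=[8.0, 9.0, 9.5, 10.0, 31.0],
)
print(summary)
```

Running the same summary against each candidate fabric, using the same workload, makes the JCT and latency comparison in the pilot like-for-like.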

This framework empowers enterprises to build a future-ready AI network. For tailored guidance on OcNOS, contact IP Infusion, or explore resources from SONiC’s community or established vendors to ensure the best fit for your needs.

For organizations that need to balance performance with rapid deployment, long-term flexibility, and financial prudence, the Commercial Open model provides the most logical and powerful path forward. Ready to discuss an architecture that delivers on speed, ROI, and freedom?

- Join our webinar on September 10th for a live look at the latest OcNOS AI features and use cases

- Learn more about OcNOS AI Fabric in our latest solution brief

- Speak with our engineers

Victor Khen is the Partner Marketing Manager for IP Infusion.